Overview

In the past year, discussions around artificial intelligence (AI) have increased at a meteoric rate. As ChatGPT has become more popular, and as other new AI products have emerged, registered investment advisers, along with the rest of the financial services industry, have raced to figure out what AI means for them. For many, the idea of AI raises more questions than answers: What is AI? How can it benefit our firm? How can employees use AI? Should employees even be allowed to use AI? What are the associated risks?

From business opportunities to regulatory considerations to associated risk, there is a lot to consider.

This guide is intended to give investment advisers a solid understanding of AI, the regulatory considerations involved, and tips for how they can begin to use AI, including how to update their compliance programs.

What is AI?

AI is a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments.1 Artificial intelligence systems use machine and human-based inputs to:

- Perceive real and virtual environments;

- Abstract such perceptions into models through analysis in an automated manner; and

- Use model inference to formulate options for information or action.

AI encompasses machine learning (ML), which is a set of techniques that can be used to train AI algorithms to improve performance on a task based on data.2

Although media hype can make AI seem like a recent arrival, it has actually been around for quite some time. Its recent popularity is a result of increased processing power: newer computers can analyze large amounts of data very quickly. Many automated customer service platforms, for example, have been using machine learning for years.

How Does AI Work?

The key to all machine learning is a process called training. For tools that work with language, training relies on Natural Language Processing (NLP). NLP starts by breaking down text into small pieces called tokens. In simple terms, NLP takes human language and converts it into data that a computer can understand. The program then searches the data it has been given for patterns that help it carry out its instructions. Humans then provide feedback to help teach the computer which patterns are accurate. For instance, when you enter a prompt in a search bar and the computer provides an answer along with a question such as, “Was this information helpful?”, that’s an example of how the computer is learning and refining its patterns. The result is a trained AI model, based on the data and feedback provided.
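To make the idea of tokens and patterns more concrete, here is a minimal Python sketch. It is an illustration only, not how any particular AI product works: the `tokenize` and `count_pairs` helpers and the sample sentence are hypothetical, and real language models split text into sub-word tokens and learn far richer patterns than simple word pairs.

```python
import re
from collections import Counter

def tokenize(text):
    """Split text into lowercase word tokens.

    Simplifying assumption: one token per word. Real LLM tokenizers
    usually work with smaller sub-word pieces.
    """
    return re.findall(r"[a-z0-9']+", text.lower())

def count_pairs(tokens):
    """Count how often each pair of adjacent tokens appears.

    A pair that shows up repeatedly is a very simple kind of "pattern".
    """
    return Counter(zip(tokens, tokens[1:]))

# Hypothetical sample text, chosen only for illustration.
sample = "The adviser reviews the portfolio. The adviser updates the portfolio."

tokens = tokenize(sample)
pairs = count_pairs(tokens)

print(tokens)
print(pairs[("the", "adviser")])  # how many times "the adviser" occurs
```

In this toy example, the pair "the adviser" appears twice, so a program looking for patterns would treat "adviser" as a likely word to follow "the" in this text. Human feedback, as described above, would then confirm or correct patterns like this one.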

Popular chatbots, such as ChatGPT, are built on a type of AI model called a Large Language Model (LLM). LLMs are trained on huge volumes of text from the internet, which is why giant tech companies like Microsoft, Google, and Meta are in this space. It can be helpful to think of a chatbot as a parrot: a parrot can mimic and repeat words it has heard without fully understanding their meaning.

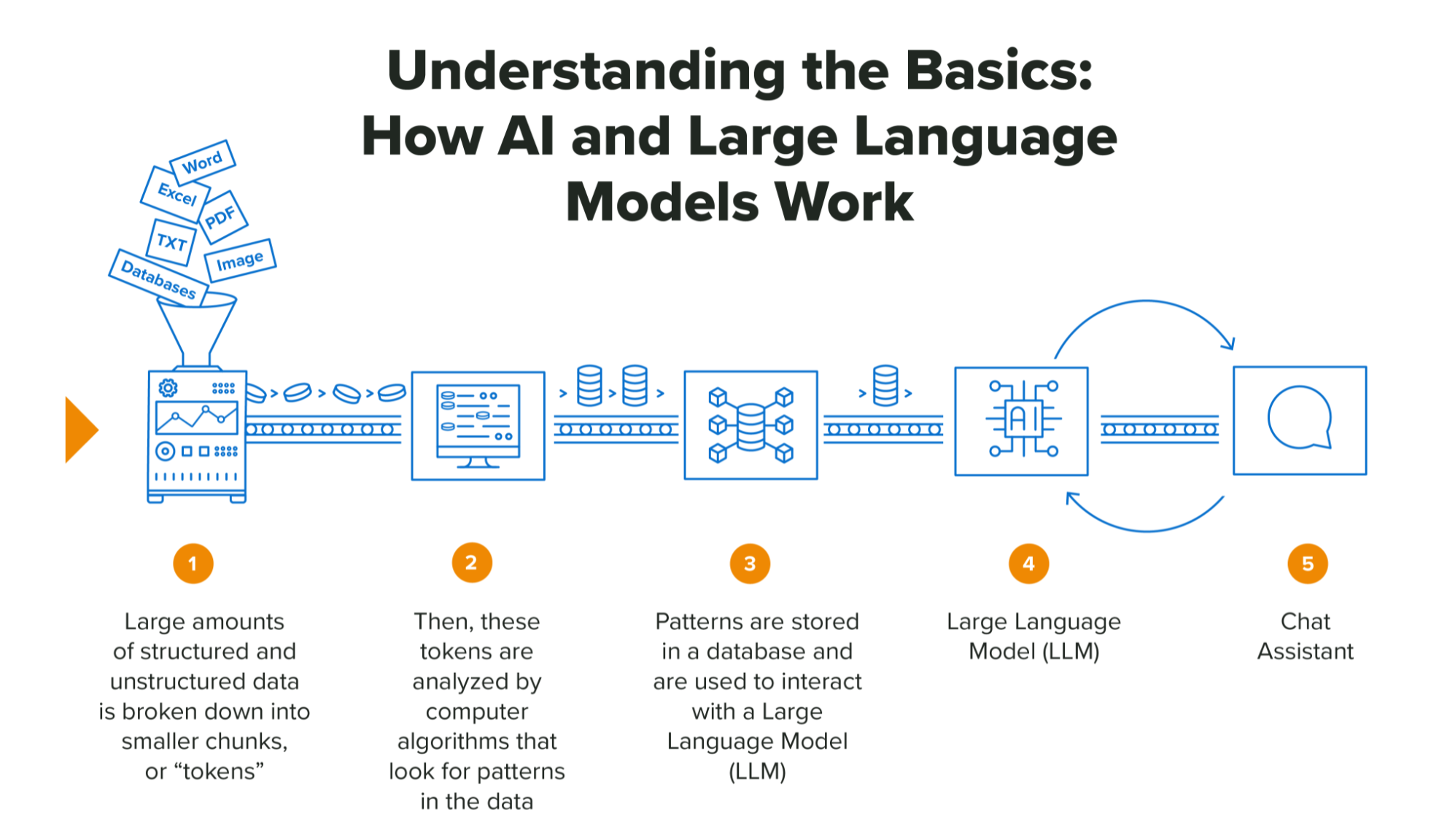

The accompanying graphic demonstrates how an LLM can work with your own files. It takes large amounts of unstructured and structured data (such as Word and Excel files) and breaks them down into smaller chunks called tokens. These tokens are analyzed for patterns, and the patterns are stored in a database that the LLM then draws on to answer questions about your files.

Understanding the Basics: How AI and Large Language Models Work
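The file-based workflow described above (break documents into chunks, store them, then look up the relevant piece when a question is asked) can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the file names, the sample text, and the `chunk`, `build_index`, and `best_chunk` helpers are all hypothetical, and the matching here is plain word overlap, whereas production systems typically rely on more sophisticated similarity measures and pass the retrieved text to an LLM to compose the answer.

```python
import re
from collections import Counter

def tokenize(text):
    """Split text into lowercase word tokens (a simplification)."""
    return re.findall(r"[a-z0-9']+", text.lower())

def chunk(text, size=12):
    """Break text into chunks of at most `size` tokens."""
    tokens = tokenize(text)
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]

def build_index(documents, size=12):
    """Store every chunk with its source file and its token counts.

    The returned list stands in for the "database" of stored patterns.
    """
    index = []
    for name, text in documents.items():
        for piece in chunk(text, size):
            index.append({
                "source": name,
                "text": " ".join(piece),
                "counts": Counter(piece),
            })
    return index

def best_chunk(index, question):
    """Return the stored chunk that shares the most tokens with the question."""
    q = Counter(tokenize(question))
    return max(index, key=lambda entry: sum((entry["counts"] & q).values()))

# Hypothetical firm documents, invented for illustration.
documents = {
    "policy.txt": "Employees must obtain approval before using AI tools with client data.",
    "fees.txt": "The advisory fee is billed quarterly in arrears based on account value.",
}

index = build_index(documents)
answer = best_chunk(index, "When is the advisory fee billed?")
print(answer["source"], "->", answer["text"])
```

Here the question about fees is matched to the chunk from the hypothetical `fees.txt` file because that chunk shares the most words with the question. In a real deployment, the retrieved chunk would be handed to the LLM, which would then phrase the answer in natural language.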